Voltage Control based on ISS Neural Certificates

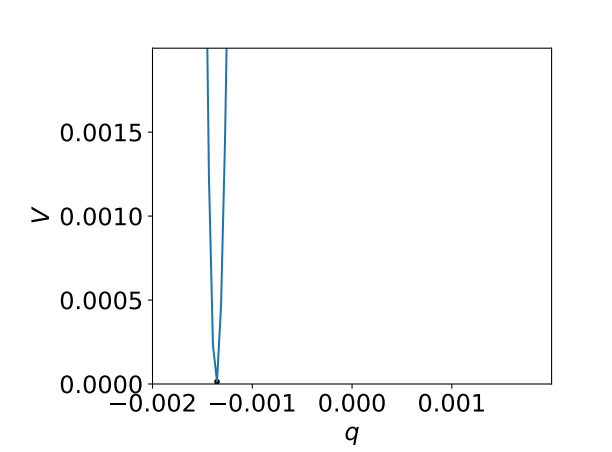

We developed NeuralISS, a method for stabilizing large-scale power systems using neural certificates based on Input-to-State Stability (ISS) Lyapunov functions. The key idea is to treat a large system as an interconnection of smaller subsystems and learn an ISS Lyapunov function for each one. These subsystem certificates are then combined to prove global stability of the whole power system.
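The paragraph above does not say which composition condition NeuralISS uses to combine subsystem certificates. The sketch below is purely an illustration and assumes the classical network small-gain condition with linear gains. Each subsystem's ISS Lyapunov function $V_i$ is assumed to be driven by a neighbour's $V_j$ through a nonnegative gain $\Gamma_{ij}$. If the spectral radius of $\Gamma$ is below 1, a positive scaling vector $s$ with $\Gamma s < s$ exists, and in that standard framework $V(x) = \max_i V_i(x_i)/s_i$ can serve as a Lyapunov function for the whole network. The function names and gain values are hypothetical.

```python
import numpy as np


def small_gain_holds(gains) -> bool:
    """Return True if the linear gain matrix has spectral radius < 1.

    gains[i, j] >= 0 is a (hypothetical) linear ISS gain describing how
    strongly subsystem j's Lyapunov function V_j can drive V_i.
    """
    gains = np.asarray(gains, dtype=float)
    if gains.ndim != 2 or gains.shape[0] != gains.shape[1]:
        raise ValueError("gain matrix must be square")
    if np.any(gains < 0):
        raise ValueError("ISS gains must be nonnegative")
    spectral_radius = np.abs(np.linalg.eigvals(gains)).max()
    return bool(spectral_radius < 1.0)


def scaling_vector(gains) -> np.ndarray:
    """Positive vector s with gains @ s < s (elementwise).

    If rho(gains) < 1, then s = (I - gains)^{-1} 1 satisfies
    s - gains @ s = 1 > 0, and s >= 1 because (I - gains)^{-1} is the
    sum of a convergent Neumann series of nonnegative matrices.
    """
    gains = np.asarray(gains, dtype=float)
    if not small_gain_holds(gains):
        raise ValueError("small-gain condition violated")
    n = gains.shape[0]
    return np.linalg.solve(np.eye(n) - gains, np.ones(n))


def composite_value(subsystem_values, s: np.ndarray) -> float:
    """Network-level certificate value V = max_i V_i / s_i."""
    return float(np.max(np.asarray(subsystem_values, dtype=float) / s))


# Hypothetical example: 100 subsystems in a chain, each coupled to its neighbours.
n = 100
weak = 0.4 * (np.eye(n, k=1) + np.eye(n, k=-1))
strong = 0.6 * (np.eye(n, k=1) + np.eye(n, k=-1))
print(small_gain_holds(weak))    # True  (rho is about 0.8)
print(small_gain_holds(strong))  # False (rho is about 1.2)
```

Linear, summation-type gains are the simplest case: the check reduces to an eigenvalue computation and the scaling vector to one linear solve. Nonlinear gains require the more general small-gain machinery, and the learned certificates in NeuralISS may well be composed differently.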

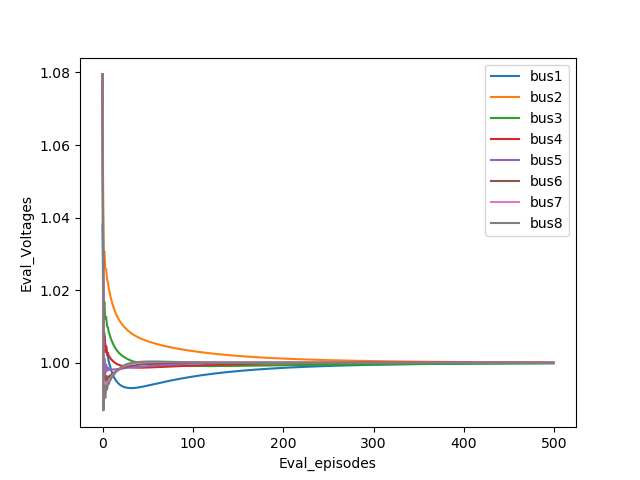

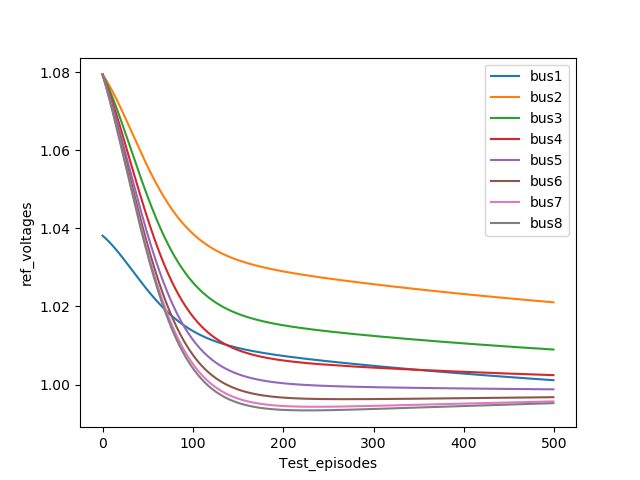

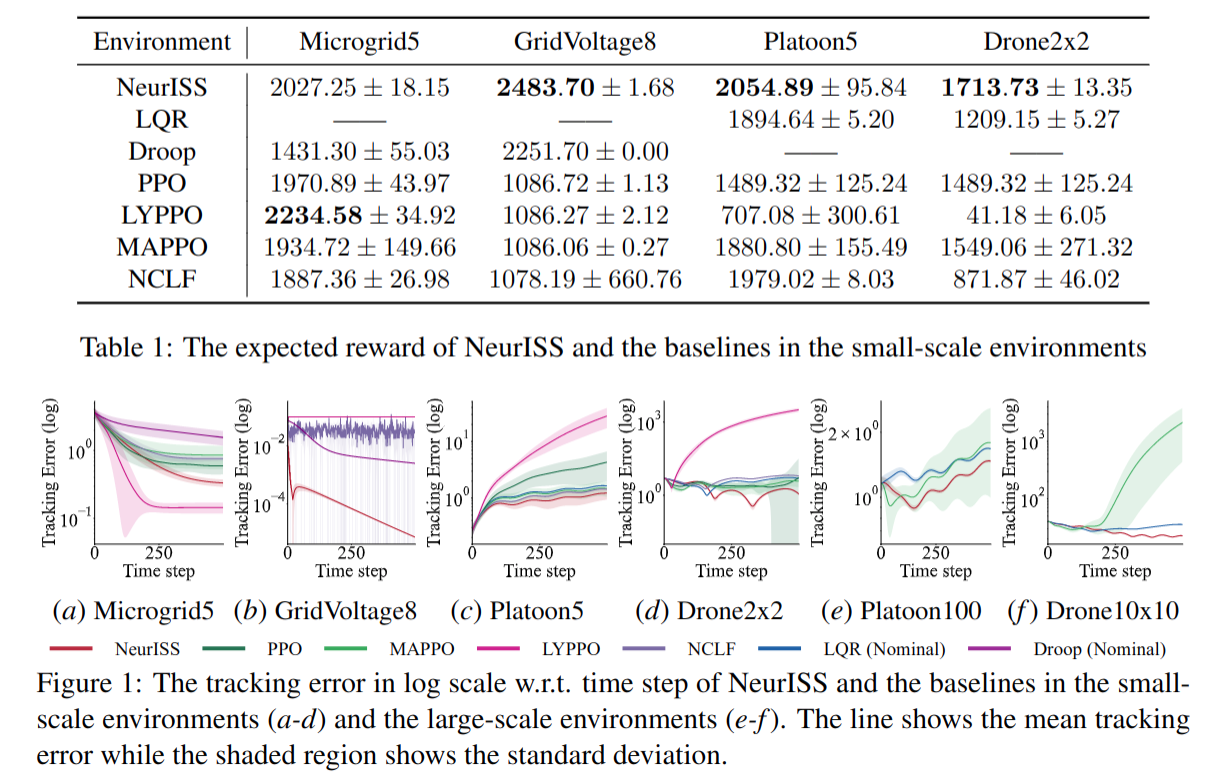

We demonstrate NeuralISS on three examples: power systems, vehicle platoons, and drone formation control. NeuralISS finds certifiably stable controllers for networks of up to 100 subsystems. Compared with centralized neural certificate approaches, NeuralISS achieves comparable results on small-scale systems and generalizes to large-scale systems that centralized approaches cannot scale to. Compared with LQR, NeuralISS handles strongly coupled networked systems such as microgrids and achieves smaller tracking errors on both small- and large-scale systems. Compared with RL baselines (PPO, LYPPO, MAPPO), NeuralISS achieves similar or smaller tracking errors on small systems and substantially reduces tracking errors on large systems (by up to 75%).

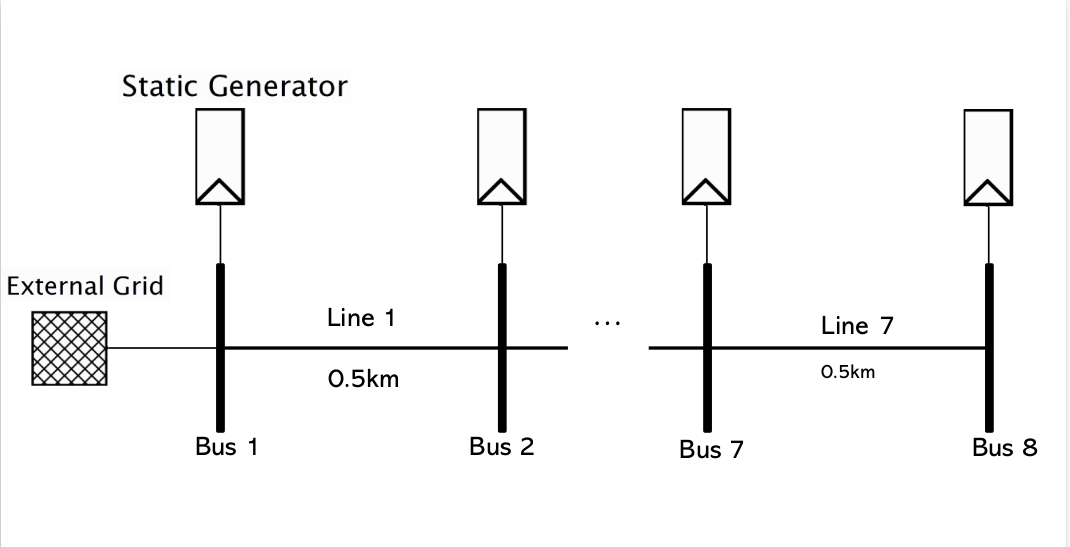

In our experiments, we consider a power distribution system with 8 buses arranged in a line, each connected to a static generator. The nominal controller is similar to droop control: it is a proportional controller on the voltage deviation, $u_i(t) = c_i\,(v_i(t) - 1)$, where $v_i(t)$ is the voltage at bus $i$ and $c_i$ is a constant gain. This proportional controller is standard in practice.
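A minimal sketch of the nominal controller on the 8-bus line network, assuming voltages are expressed in per-unit so that 1.0 is the nominal value. The helper names (`line_edges`, `nominal_control`) and all numeric values are hypothetical. The text gives neither the sign or magnitude of $c_i$ nor the bus dynamics, so no closed-loop simulation is attempted.

```python
import numpy as np

N_BUSES = 8  # buses arranged in a line, as in the experiments above


def line_edges(n: int) -> list[tuple[int, int]]:
    """Edges of a line (chain) topology: bus i connects to bus i + 1."""
    return [(i, i + 1) for i in range(n - 1)]


def nominal_control(v, c) -> np.ndarray:
    """Droop-like proportional controller u_i = c_i * (v_i - 1), per bus.

    v: bus voltages (assumed per-unit, nominal value 1.0).
    c: constant per-bus gains c_i (hypothetical values in the example).
    """
    v = np.asarray(v, dtype=float)
    c = np.asarray(c, dtype=float)
    if v.shape != c.shape:
        raise ValueError("v and c must have the same shape")
    return c * (v - 1.0)


# Hypothetical voltages and gains; the sign of c_i here is an arbitrary choice.
edges = line_edges(N_BUSES)
v = np.array([1.03, 1.02, 1.01, 1.00, 0.99, 0.98, 0.97, 0.96])
c = np.full(N_BUSES, -0.5)
u = nominal_control(v, c)
print(edges)
print(u)
```

Each $u_i$ depends only on the local voltage $v_i$, so this nominal controller is fully decentralized. The line topology matters only through the physical coupling between neighbouring buses, which this sketch does not model.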

See the related paper for more details.